Speech Analytics - It's all about sampling.

Wednesday, March 25, 2015

Speech Analytics is a great sampling tool, but a flawed measurement tool.

NSA - Looking for Outliers

The NSA uses speech analytics to sample vast amounts of speech. They use supercomputers to collect and sift through huge amounts of data to find the few instances that may contain meaningful intelligence, which helps them focus on potential threats to US interests. They are searching for highly valuable outliers for further investigation, and they have the budget to sift through a sea of false positives gleaned from an ocean of low-probability events.

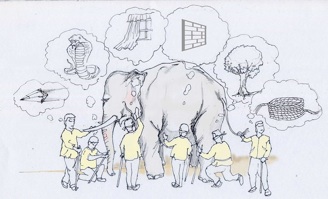

Sound Concepts

Humans hear (or read) words in a context and construct meaning. Words morph into concepts. Computers process sounds into phonemes and try to produce words. Sounds become words. These are very different processes. It's important to understand that speech analytics is about producing text which can be searched for words.

Speech analytics translates sounds into text, producing searchable text documents. This transformation introduces inaccuracies. The fidelity of the sound file and the pronunciation of the words (think accents and enunciation) affect the accuracy of the translation. Low-fidelity phone conversations transcribed with speaker-independent LVCSR (large vocabulary continuous speech recognition) are error-prone.
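To make the consequence concrete, here is a minimal Python sketch, assuming a plain whole-word keyword search over transcripts. The competitor name "acme" and both transcripts are hypothetical. A single misrecognized word is enough for the search to miss the call entirely, even though a human listener would have caught the mention.

```python
import re

# Hypothetical example: the call as a human would hear it, and as an
# error-prone recognizer might transcribe it ("acme" is a made-up competitor).
reference = "i'm switching to acme because their plan is cheaper"
transcript = "i'm switching to act me because their plan is cheaper"


def mentions(text: str, keyword: str) -> bool:
    """Return True if `keyword` appears as a whole word in `text`."""
    return re.search(rf"\b{re.escape(keyword)}\b", text) is not None


print(mentions(reference, "acme"))   # True: the word is in what was said
print(mentions(transcript, "acme"))  # False: the search misses the call
```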

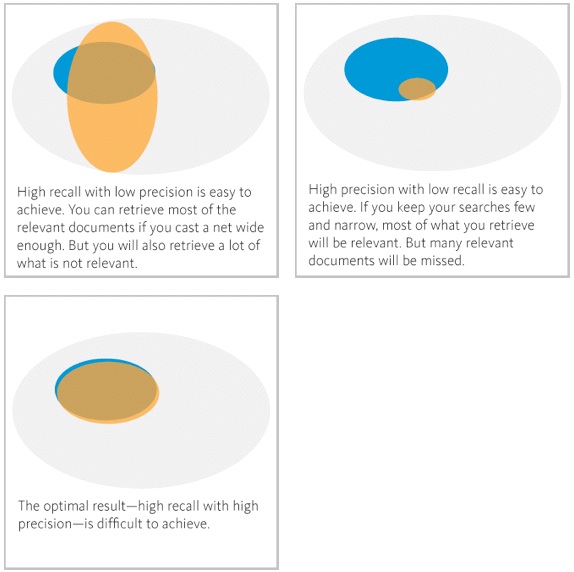

Recall-Precision Tradeoff

"As an example, if there are 100 files searched for specific words, which occur in 60 of them, then if there are 30 'hits' returned - all of which contain the word or phrase - that is measured as 100% precision, and 50% recall." Source: Calabio.com
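The quoted example can be checked with ordinary set arithmetic. In this minimal sketch the files are stood in for by integers (an illustrative assumption); precision is the share of returned files that are relevant, and recall is the share of relevant files that were returned.

```python
def precision_recall(relevant: set, retrieved: set) -> tuple[float, float]:
    """Precision: share of retrieved items that are relevant.
    Recall: share of relevant items that were retrieved."""
    true_hits = relevant & retrieved
    precision = len(true_hits) / len(retrieved) if retrieved else 0.0
    recall = len(true_hits) / len(relevant) if relevant else 0.0
    return precision, recall


# The quoted example: 100 files, the word occurs in 60 of them,
# and the search returns 30 files, all of which contain the word.
population = set(range(100))   # every file searched
relevant = set(range(60))      # files that really contain the word
retrieved = set(range(30))     # files the search returned
assert relevant <= population and retrieved <= population

p, r = precision_recall(relevant, retrieved)
print(f"precision={p:.0%}, recall={r:.0%}")  # precision=100%, recall=50%
```

Perfect precision here says nothing about the 30 relevant files the search never returned; that gap is what recall measures.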

Example of Recall-Precision Tradeoff

The gray oval represents all documents in the population; the blue oval is the set of relevant documents; the orange oval represents the documents retrieved by your search or assessed as relevant by the review process.

That last point bears repeating: the optimal result - high recall with high precision - is difficult to achieve. As it turns out, very difficult. And in our experience, it cannot be achieved through search technology alone. It also cannot be achieved through manual review alone. Finding just the necessary information and little else is no easy task, but it can be done through an optimal combination of technology, process and human expertise. It is the intersection of these three components, with the human expertise driving the appropriate application of technology and process, that enables simultaneously high recall and high precision.

Source: Document Review Accuracy: The Recall-Precision Tradeoff

Posted by Jeff Kangas on Fri, Aug 17, 2012 @ 02:15 PM

Used in Sampling

Combined with call metadata, speech analytics can be an effective sampling tool.

Let's say we want a sample of 400 call recordings from 1000 calls coming into a call center's retention queue. We specify that the sample should include only calls that resulted in churn and that mentioned a specific 'competitor.'

The hypothetical speech analytics engine finds 400 calls. Of these, 360 (90%) actually mention the competitor and 40 (10%) don't.

This means the sample has a 10% false-positive rate (90% precision). An analyst can then review these calls to harvest useful intelligence about the root causes of churn, simply excluding the false positives from the final statistics.
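A minimal sketch of that review step, assuming each returned call carries a churn flag from call metadata and the analyst's yes/no judgment on whether the competitor was really mentioned. The `ReviewedCall` record and its fields are hypothetical, not part of any speech analytics product's API.

```python
from dataclasses import dataclass


@dataclass
class ReviewedCall:
    call_id: int
    churned: bool              # from call metadata (hypothetical field)
    mentions_competitor: bool  # analyst's judgment after listening


# Hypothetical sample matching the scenario: 400 calls returned,
# 360 really mention the competitor and 40 are false positives.
sample = [ReviewedCall(i, True, i < 360) for i in range(400)]

# The analyst keeps only confirmed mentions for the final statistics.
confirmed = [c for c in sample if c.mentions_competitor]
false_positives = len(sample) - len(confirmed)
precision = len(confirmed) / len(sample)

print(len(confirmed), false_positives, f"{precision:.0%}")  # 360 40 90%
```

Because a person checks every call, the false positives cost review time but never reach the statistics.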

Measuring Change

If we instead ask the speech analytics software to measure the percentage of calls that churned and mentioned the 'competitor,' we also have to account for recall error: the real hits the engine failed to find. This is an entirely different matter.

How accurate is the resulting statistic, and if accurate, does it help us to find remediations?

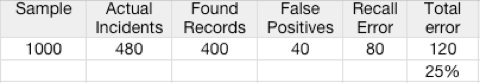

Let's say the 1000 calls actually contained 480 hits (calls that churned and mentioned the competitor). We already know that 10% of the returned calls were false positives; now we also have to account for the real hits the engine missed.

Of the 1000 calls reviewed, there were actually 480 hits, but only 360 real hits were presented to us. That's 120 misses out of 480 possible hits, a 25% recall error (75% recall).
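The arithmetic can be checked with a few lines of Python. The figures are the hypothetical ones from this scenario, and the sketch assumes the 360 presented hits are all real hits; the variable names are illustrative.

```python
actual_hits = 480      # calls that really churned and mentioned the competitor
true_hits_found = 360  # real hits among the calls the engine returned
false_positives = 40   # returned calls that did not actually match

recall = true_hits_found / actual_hits
miss_rate = 1 - recall
precision = true_hits_found / (true_hits_found + false_positives)

print(f"recall={recall:.0%}, misses={actual_hits - true_hits_found}, "
      f"miss rate={miss_rate:.0%}, precision={precision:.0%}")
# recall=75%, misses=120, miss rate=25%, precision=90%
```

Unlike false positives, the 120 misses never appear in front of the analyst, so they cannot be excluded by review; they can only be estimated.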

Unfortunately, the error rate isn't constant. It varies from month to month because the calls in each sample differ.
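A minimal Python sketch of why a drifting error rate undermines trend measurement. It assumes, purely for illustration, that the true number of matching calls stays fixed at 480 each month while recall drifts; the monthly recall values are hypothetical, not measured.

```python
actual_hits = 480  # hold the true number of matching calls constant

# Hypothetical monthly recall values; the point is only that they drift.
monthly_recall = {"Jan": 0.75, "Feb": 0.80, "Mar": 0.70}

# Hits the engine would report each month if nothing real had changed.
found_by_month = {m: round(actual_hits * r) for m, r in monthly_recall.items()}

for month, found in found_by_month.items():
    print(f"{month}: engine finds {found} of {actual_hits} actual hits")
```

The reported counts swing between 336 and 384 even though the underlying behavior never changed, so a month-over-month difference of that size could be measurement noise rather than a real shift.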

Now we have to decide what action to take. Should we reduce price, increase the amount spent on retention marketing, give agents tools to address the competitor's weaknesses, etc.? Or should we take no action?

Given the unknowns (causes), inaccuracies, and variability between samples, speech analytics is not very good at measuring change over time.

Call Intelligence - Better by Design

Call Intelligence Inc. is a Transactional Intelligence and Design Consultancy with over 24 years of experience helping our clients better understand and improve the outcomes of interactions with their prospects and customers.

Our analyses allow us to map, correlate and prioritize thousands of possible relationships between marketing programs, call handling systems, calls, callers, call components, concerns, agents, competition, etc., and outcomes.